Testing is fundamental to providing a reliable experience for your users.

Everyone can take part in the web testing process. It doesn't take deep technical knowledge to give feedback on something that isn't behaving correctly or as expected. Some forms of testing, such as unit testing, require technical knowledge of the codebase, while others, such as accessibility testing, don't. Both of these, and more, are described in more detail below.

Testing doesn't only benefit developers and product managers; it benefits the entire company. It allows the product team to move faster and with more confidence (who doesn't love a more accurate time estimate?), and ultimately to get robust features in front of users sooner.

In 2018, Spotify said they focus on testing to "move fast, with confidence that things work". While that may seem unattainable, the types of software testing below can help you get there:

UNIT TESTING

Testing at its smallest scale, this focuses on components of code at an individual and isolated level. Fundamentally, you have an input, the code you're testing (the unit) and an output. A unit test succeeds when the output matches a predetermined value. Because unit tests often focus on single, pure functions, they're quick to write and run.

Unit testing is just as fundamental on the backend, with frameworks like RSpec, PHPUnit or unittest. In the book Testing Rails, thoughtbot notes that, in their experience, having comprehensive automated tests in Rails "catch[es] bugs sooner, preventing them from ever being deployed."
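To make the input, unit and output idea concrete, here is a minimal sketch using Python's built-in unittest. The `slugify` function is a hypothetical unit invented for illustration; it doesn't come from any particular codebase.

```python
import re
import unittest


def slugify(title: str) -> str:
    """Turn a page title into a URL slug (hypothetical unit under test)."""
    # Lowercase, then collapse every run of non-alphanumeric characters into one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())
    # Drop any hyphen left at the start or end.
    return slug.strip("-")


class SlugifyTest(unittest.TestCase):
    def test_replaces_spaces_and_punctuation(self):
        # Input -> unit -> output, compared against a predetermined value.
        self.assertEqual(slugify("Hello, World!"), "hello-world")

    def test_empty_title_gives_empty_slug(self):
        self.assertEqual(slugify(""), "")
```

Saved as a file such as `test_slugify.py` (an assumed name), these tests should be picked up by running `python -m unittest` from the same directory.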

INTEGRATION TESTING

Integration testing should answer the question 'Do these services, when connected, run as expected?' Microservices have seen a relatively recent rise in popularity, which means parts of your application may be running in isolation and talking to each other. Integration tests ensure this communication works as expected.

Integration testing isn't limited to newer approaches like microservices; it's also useful for checking how your application talks to databases or third parties. A simple example is: 'A user signs up. Are they created in the database?'
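The signup example could be sketched roughly as follows. This assumes a hypothetical `sign_up` function and a single `users` table, and uses an in-memory SQLite database so the test exercises real SQL without needing a running database server.

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    # Assumed schema for illustration only.
    conn.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL)"
    )


def sign_up(conn: sqlite3.Connection, email: str) -> int:
    """Hypothetical signup service: stores the user and returns the new row id."""
    with conn:  # commits on success, rolls back if the insert raises
        cursor = conn.execute("INSERT INTO users (email) VALUES (?)", (email,))
    return cursor.lastrowid


def test_signup_creates_user_in_database() -> None:
    conn = sqlite3.connect(":memory:")
    try:
        create_schema(conn)
        user_id = sign_up(conn, "ada@example.com")
        # Query the database directly rather than trusting the service's return value.
        row = conn.execute(
            "SELECT email FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        assert row == ("ada@example.com",)
    finally:
        conn.close()
```

The point of the design is that the assertion reads from the database itself, so the test fails if the service and the storage layer disagree. A runner such as pytest would collect the `test_` function automatically.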

FUNCTIONAL TESTING

Functional testing can be very similar to integration testing, but with a business objective in mind. Take the user creation example above: a functional version could be 'When a user signs up, does the response include the newly created user model?'
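As a rough sketch of that functional check, the example below asserts on what the caller receives rather than on what was stored. `SignupApi`, `User` and `Response` are hypothetical stand-ins for a real endpoint and its models, kept in memory so the example stays self-contained.

```python
from dataclasses import asdict, dataclass
from itertools import count


@dataclass
class User:
    id: int
    email: str


@dataclass
class Response:
    status: int
    body: dict


class SignupApi:
    """Hypothetical stand-in for a signup endpoint and its storage."""

    def __init__(self) -> None:
        self._next_id = count(1)
        self.users = {}  # user id -> User

    def post_signup(self, payload: dict) -> Response:
        email = str(payload.get("email", "")).strip()
        if "@" not in email:
            return Response(400, {"error": "a valid email is required"})
        user = User(id=next(self._next_id), email=email)
        self.users[user.id] = user
        # The business requirement: the response carries the new user model.
        return Response(201, {"user": asdict(user)})


def test_signup_response_includes_new_user() -> None:
    response = SignupApi().post_signup({"email": "ada@example.com"})
    assert response.status == 201
    assert response.body["user"] == {"id": 1, "email": "ada@example.com"}


def test_signup_rejects_missing_email() -> None:
    response = SignupApi().post_signup({})
    assert response.status == 400
    assert "user" not in response.body
```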

END-TO-END TESTING

Think of end-to-end testing as a situation where units are combined and tested as a group. Tests are run either on fragments (components) of an application in isolation or on an entire user journey. Using Twitter as an example, a component test could be 'Can the user like other users' Tweets?' and a test of an entire journey could be 'Can the user sign up, create their profile and create their first Tweet?'

End-to-end tests are usually a procedural list of commands that tell a browser to do something without human control. Common commands could be 'click the signup button', 'select Australia from the country dropdown' and 'is the cancel button visible?'.
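The shape of such a script can be sketched without a real browser. In the example below, `FakePage` is a hypothetical in-memory stand-in invented for illustration; in a real suite a browser automation driver such as Selenium or Playwright would take its place, with its own API.

```python
class FakePage:
    """Hypothetical in-memory stand-in for a browser page."""

    def __init__(self) -> None:
        self.visible = {"signup button", "country dropdown"}
        self.options = {"country dropdown": ["Australia", "New Zealand"]}
        self.selected = {}

    def click(self, target: str) -> None:
        self._require_visible(target)
        if target == "signup button":
            # Assumed behaviour: clicking signup reveals the cancel button.
            self.visible.add("cancel button")

    def select(self, target: str, option: str) -> None:
        self._require_visible(target)
        if option not in self.options[target]:
            raise LookupError(f"{option!r} is not an option in {target}")
        self.selected[target] = option

    def is_visible(self, target: str) -> bool:
        return target in self.visible

    def _require_visible(self, target: str) -> None:
        if target not in self.visible:
            raise LookupError(f"{target} is not visible")


def run_signup_journey(page: FakePage) -> None:
    # Each line is one command a browser driver would carry out unattended.
    page.click("signup button")
    page.select("country dropdown", "Australia")
    assert page.is_visible("cancel button"), "cancel button should be visible"
```

Keeping the journey as a flat sequence of commands makes it read like the manual steps a tester would follow, which is what makes these scripts easy to review with non-developers.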

Automating these tests across many environments often relies on services like Sauce Labs or BrowserStack. These services provide virtual machines, so you can run a queue of instructions in a variety of environments (from IE9 on Windows to the latest Chrome on macOS). This helps you catch issues in environments that your development team isn't working in.

This form of testing has historically been fragile, with the cost of upkeep growing rapidly as the team and codebase expand. However, as tooling gets more advanced, testing gets more reliable. Jason Palmer from Spotify has a great overview of how to diagnose, respond to and prevent test flakiness.

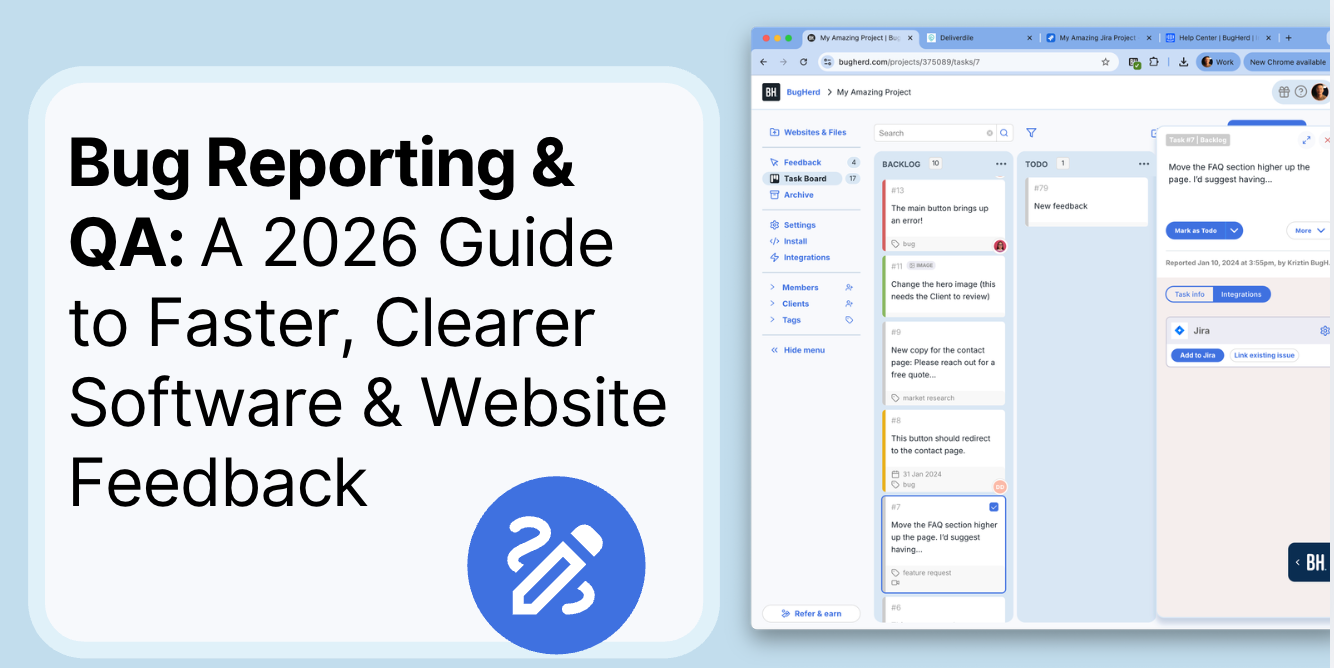

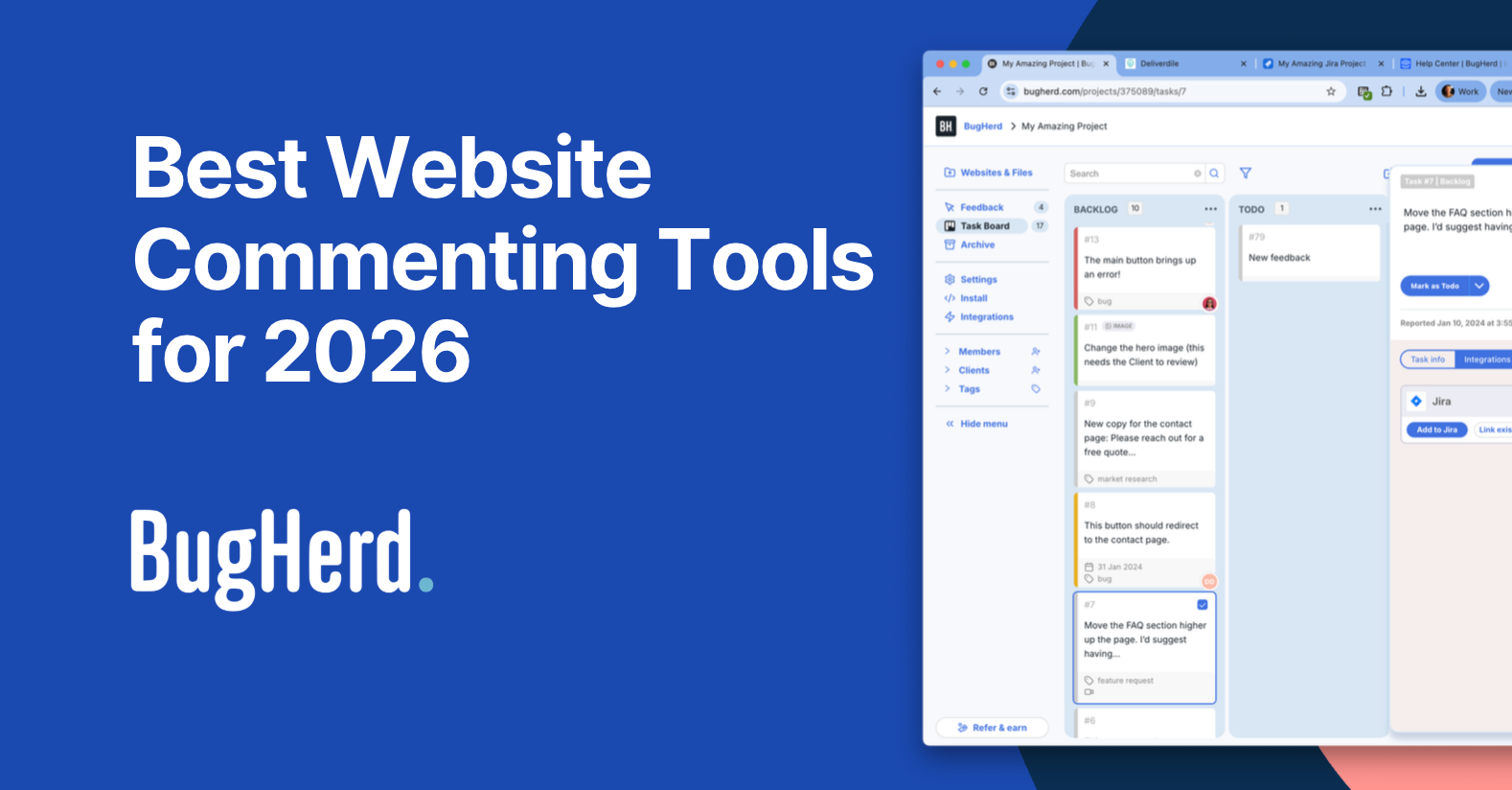

BUGHERD FOR ACCEPTANCE TESTING

User Acceptance Testing, often shortened to UAT, is the final step in signing off on a deliverable. UAT is a structured way to ensure the piece of work meets the requirements of the contract (specification) outlined at the start of the development lifecycle. To help you get the best user feedback, we suggest:

- Make sure the users doing the testing aren't those involved in developing the work

- Begin the feedback process as early as possible; fixing issues early on often saves time overall

- Have a clear and easy process for collecting, assigning, working on and closing out issues (including firm deadlines on feedback)

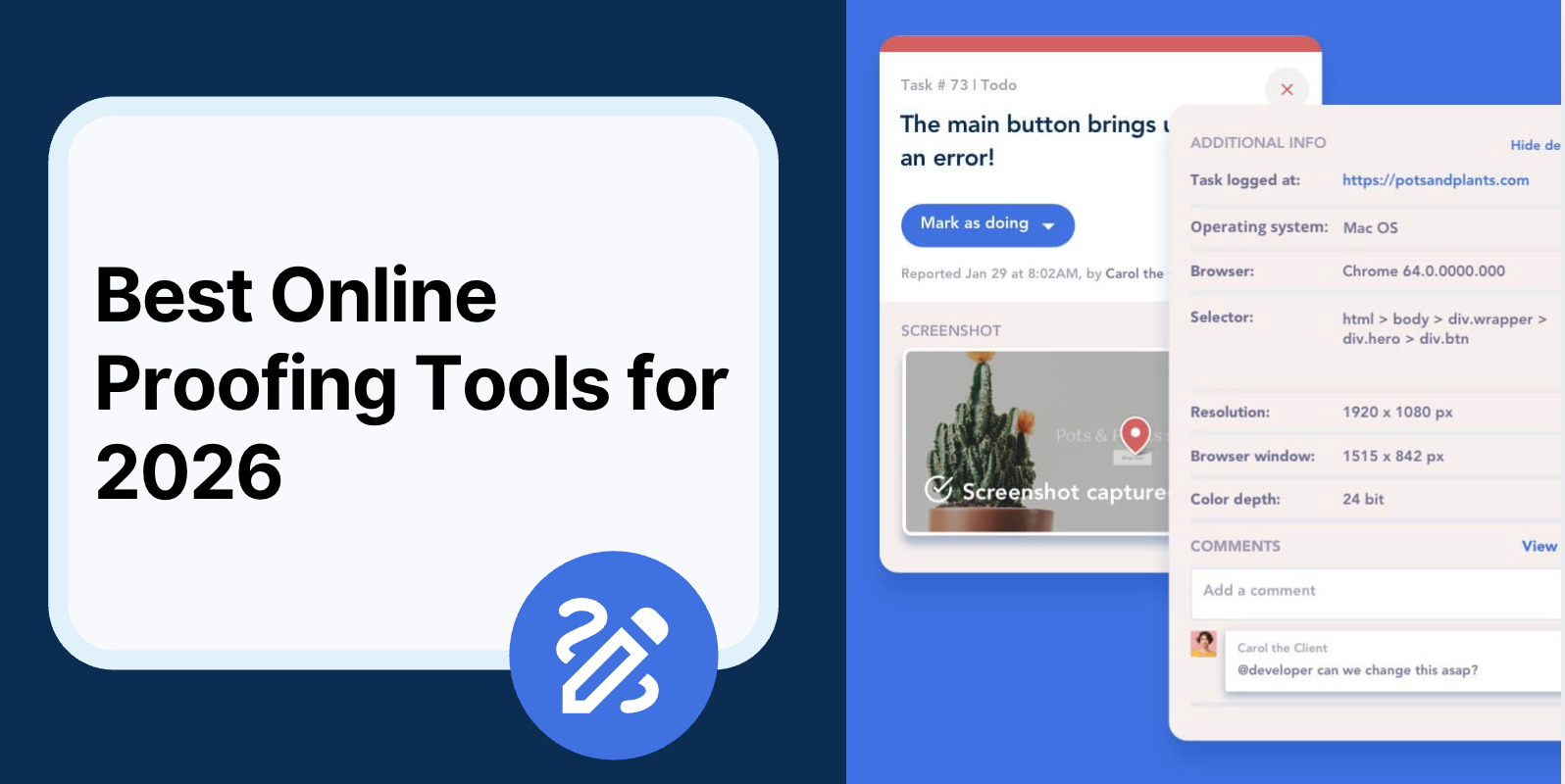

The best tools for collecting web feedback are those that provide contextual, visual and actionable feedback, with little need for back-and-forth to clarify it.

For example, BugHerd collects feedback from users via a browser extension, so feedback is entered directly on the page. Tasks are then sent to a Kanban board for a dev team to manage and work on. Simple!


